Last updated on January 7, 2026

Machine learning (ML) and generative AI have rapidly lowered the technical bar for sophisticated attacks: easier access to large language models, open-source ML tooling, and on-demand compute mean attackers can prototype scams and evasion techniques faster than before. Government and industry reports highlight that AI is now a material factor in criminal tactics and that defenders must adapt.

What Is ML-Powered Cybercrime, and Why It Matters Now

Definition: Use of ML/AI by adversaries to improve reconnaissance, craft more convincing social engineering, evade detection, guess credentials, and automate operations.

Why now: Pre-trained LLMs, widely available voice/video synthesis, and affordable compute allow attackers to do at scale what once required specialists, creating higher-volume, higher-quality attacks.

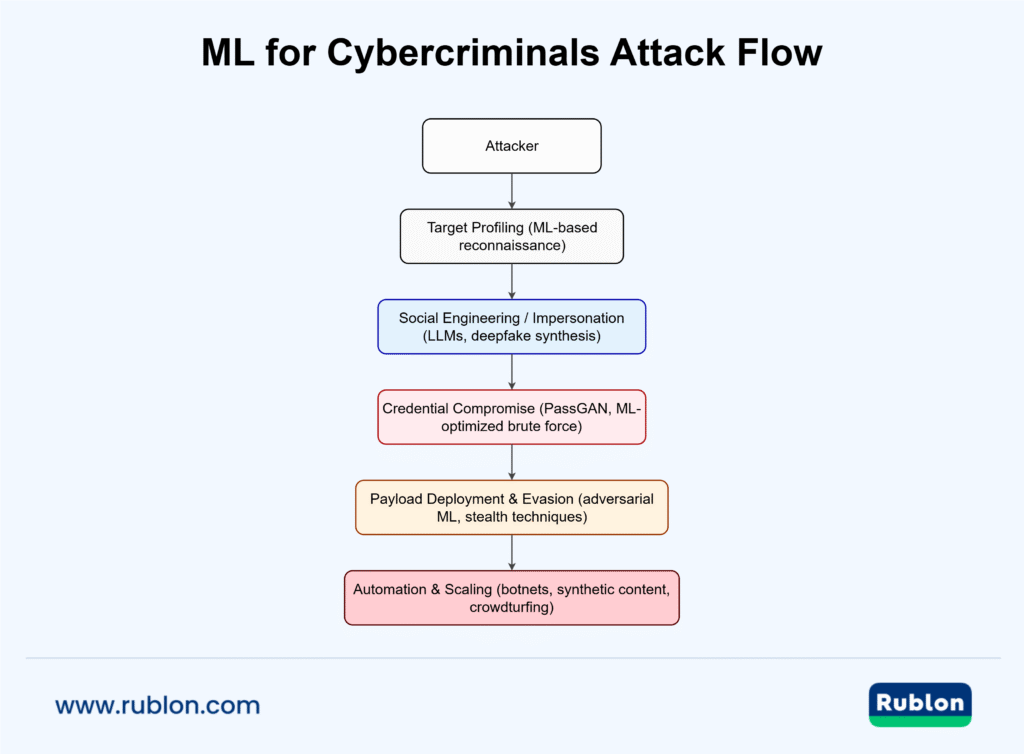

The Attack Lifecycle — Where ML Typically Fits

- Reconnaissance / Information Gathering: ML helps classify targets, extract likely weak points from public data, and prioritize victims.

- Impersonation / Social Engineering: LLMs and generative models craft personalized phishing and deepfakes for voice/video social engineering.

- Credential compromise / Unauthorized access: ML is used for smarter password guessing and CAPTCHA bypass research.

- Attack / Payload Delivery: adversarial ML enables evasion of ML-based detectors and generation of more plausible malicious content.

- Automation / Post-exploitation: ML-driven automation (botnets, crowdturfing) scales impact and reduces human labor.

Reconnaissance / Information Gathering

- ML models can classify harvested social media, job posts, and corporate signals to find high-value targets or employees with access.

- Common techniques include clustering and classification (topic models, embeddings) and LLM prompt pipelines that summarize a person's public footprint into an attack profile.

- The output is a prioritized target list for manual follow-up or automated campaigns, which reduces wasted effort and raises success rates.

Impersonation / Social Engineering

- LLMs produce tailored phishing/spear-phishing copy at scale and can produce follow-ups; voice/video deepfakes amplify social engineering (vishing/video-call fraud).

- Real incidents: high-profile deepfake scams and attempted CEO impersonations show practical use of cloned voices and synthetic video in fraud.

Credential Compromise / Unauthorized Access

- Generative models like PassGAN show that ML can produce plausible password guesses that complement traditional cracking rules; reinforcement-learning or generative models can also speed up the generation of credential-guess lists.

- Attackers may also use ML to optimize brute-force sequences and adapt guessing strategies from leaked breach datasets.


Attack / Payload Delivery

- Adversarial techniques (e.g., MalGAN) demonstrate how ML can craft payloads or inputs designed to evade ML-based detectors (antivirus or other classifier systems).

- Attackers can combine LLM-written social engineering with adversarial payloads to both deliver and hide malware, improving the odds that it persists.

Automation / Post-Exploitation

- Hivenet-style botnets, automated crowdturfing, and AI-driven review generation let attackers monetize at scale (e.g., fake reviews, coordinated reputation attacks).

- Automation reduces operator costs and increases campaign speed; however, orchestration quality still matters (quality vs. quantity tradeoff).

Techniques & Examples

- Phishing / LLMs: automated spear-phishing using Markov/NN/LLM text generation; Black Hat demos show high click rates in experiments.

- Deepfakes: voice-cloning fraud (CEO voice scam, 2019) and 2024–25 high-profile deepfake attempts against executives.

- Adversarial ML / MalGAN: GAN-based methods to make malware evade black-box detectors.

- PassGAN / credential models: GANs trained on breach leaks to produce realistic password guesses.

- Crowdturfing / fake content: generative AI for content/review creation and bot-run micro-interactions.

Challenges & Constraints for Attackers

- Data & cost: realistic model training needs data (breach dumps, voice samples) and compute, but cloud credits/leases lower the entry cost.

- Model limitations: overfitting, poor generalization, and transferability limits mean some ML attacks require tailoring.

- Detectability & defenses: anomaly detection, watermarking, and adversarial defenses raise detection costs for attackers.

Defensive Strategies & Mitigations

- Use ML for defense: deploy anomaly detection, EDR/behavioral baselines, and AI-assisted threat hunting to raise detection rates.

- Adversarial ML defenses: adopt robust modeling, input sanitization, and continuous model validation to reduce evasion risk.

- Operational controls: multi-factor authentication (MFA), privileged-access management, strict segmentation, and phishing-resistant authentication remain high-value controls.

- People & process: targeted user training, red-teaming with synthetic adversarial content, and logging + rapid response improve resilience.
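To make "behavioral baselines" a bit more concrete, here is a minimal sketch of per-user anomaly detection using a simple z-score over daily event counts (logins, file downloads, and so on). The function name `zscore_alerts`, the 7-day minimum history, the 3.0 threshold, and the standard-deviation floor are all illustrative assumptions, not tuned values or any product's method; real EDR and UEBA tools use far richer features.

```python
from statistics import mean, pstdev


def zscore_alerts(history, today, threshold=3.0, min_sigma=1.0, min_days=7):
    """Flag users whose activity today deviates strongly from their own baseline.

    history: dict of user -> list of past daily counts (hypothetical input shape)
    today:   dict of user -> today's count
    Returns a list of (user, z_score) tuples for users above the threshold.
    """
    alerts = []
    for user, count in today.items():
        past = history.get(user)
        if not past or len(past) < min_days:
            # Too little history to form a baseline; handle via a review queue instead.
            continue
        mu = mean(past)
        # Floor the spread so very regular users don't trigger on tiny changes.
        sigma = max(pstdev(past), min_sigma)
        z = (count - mu) / sigma
        if abs(z) > threshold:
            alerts.append((user, round(z, 2)))
    return alerts


history = {"alice": [10, 12, 11, 9, 10, 11, 12], "bob": [3, 4, 3, 5, 4, 3, 4]}
print(zscore_alerts(history, {"alice": 11, "bob": 40}))
```

Here only `bob` is flagged: a jump from roughly 4 events a day to 40 is far outside his own baseline, while `alice` stays within her normal range. Comparing each user to their own history, rather than to a global average, is what makes this a behavioral baseline.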


Trends & Outlook

- Fusion AI + threat intel: automated model-assisted threat hunting and rapid extraction of IOC patterns from streaming telemetry.

- Federated & synthetic data: attackers and defenders both use synthetic/federated data to train models while avoiding attribution or leaks.

- Dual-use generative AI: generative systems will be simultaneously the best attack enabler and a core defensive tool; governance, explainability, and model-hardening will be critical.
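The simplest building block of IOC extraction from telemetry is pattern matching over log lines. The sketch below pulls IPv4 addresses and SHA-256 hashes out of a stream of lines and counts how often each appears; the function name `extract_iocs` and the two regular expressions are illustrative assumptions, and production pipelines typically add defanging, domain/URL parsing, allowlists, and threat-intel enrichment on top.

```python
import re
from collections import Counter

# IPv4 with each octet limited to 0-255; non-capturing groups so findall returns whole matches.
IPV4 = re.compile(r"\b(?:(?:25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|1?\d?\d)\b")
# SHA-256 digests are 64 hex characters.
SHA256 = re.compile(r"\b[a-fA-F0-9]{64}\b")


def extract_iocs(lines):
    """Count candidate IPv4 and SHA-256 indicators across an iterable of log lines."""
    ips, hashes = Counter(), Counter()
    for line in lines:
        ips.update(IPV4.findall(line))
        # Normalize hashes to lowercase so the same digest is counted once.
        hashes.update(h.lower() for h in SHA256.findall(line))
    return ips, hashes


log = ["deny src=203.0.113.7 dst=198.51.100.20", "retry src=203.0.113.7"]
ips, _ = extract_iocs(log)
print(ips.most_common(1))
```

Because `extract_iocs` accepts any iterable, it works equally on a file handle or a generator reading from a log stream; the counts give a cheap first signal for which indicators are worth enriching or alerting on.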

Immediate Checklist (3-step):

- Enforce MFA for all privileged access.

- Centralize logging/EDR and create alerts for anomalous content or unexpected file uploads.

- Conduct targeted red-team simulations using generative phishing and voice deepfakes.
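For the first checklist item, it helps to verify that MFA enforcement actually holds across privileged accounts. The sketch below audits a list of account records and reports privileged users with no MFA or with only phishable factors. The `Account` record shape, the method labels (`"fido2"`, `"passkey"`), and the `mfa_gaps` function are hypothetical; map them from your own directory or IdP export. The group names are common examples (Active Directory groups and an Entra ID role), not a complete list of privileged roles.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical record shape; populate it from a directory or IdP export.
@dataclass
class Account:
    name: str
    groups: set[str] = field(default_factory=set)
    mfa_methods: set[str] = field(default_factory=set)


# Example privileged groups/roles; extend with your own.
PRIVILEGED_GROUPS = {"Domain Admins", "Enterprise Admins", "Global Administrator"}
# Factors treated as phishing-resistant in this sketch.
PHISHING_RESISTANT = {"fido2", "passkey"}


def mfa_gaps(accounts):
    """Return (name, reason) for privileged accounts lacking phishing-resistant MFA."""
    gaps = []
    for acct in accounts:
        if not acct.groups & PRIVILEGED_GROUPS:
            continue  # not privileged; out of scope for this check
        if not acct.mfa_methods:
            gaps.append((acct.name, "no MFA"))
        elif not acct.mfa_methods & PHISHING_RESISTANT:
            gaps.append((acct.name, "phishable MFA only"))
    return gaps


accounts = [
    Account("alice", {"Domain Admins"}, {"fido2"}),
    Account("bob", {"Domain Admins"}),
    Account("carol", {"Global Administrator"}, {"sms", "totp"}),
]
print(mfa_gaps(accounts))
```

Splitting "no MFA" from "phishable MFA only" reflects the point made earlier about phishing-resistant authentication: an account with SMS or TOTP is better than none, but it remains exposed to the LLM-driven phishing described above.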

Conclusion

ML is not a magic wand for attackers, but it is a force multiplier: it enables faster reconnaissance, more convincing impersonation, and scalable automation. Defensive priorities are simple and concrete: harden authentication with MFA (ideally phishing-resistant), apply behavioral detection and EDR, segment networks, centralize logging, run AI-aware red teams, and keep patching and vendor controls tight.